0. 실행 환경

AWS t2.xlarge

OS : Red Hat Enterprise Linux 8.6

1. 설치하기

1) Java 설치

yum update

sudo yum install -y java-1.8.0-openjdk

java -version2) SSH 설정

ssh-keygen -t rsa -P ''

cat /home/ec2-user/.ssh/id_rsa.pub >> /home/ec2-user/.ssh/authorized_keys3) Hadoop 다운로드

# 다운로드

wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.4/hadoop-3.3.4-src.tar.gz

#압축 풀기

tar xvzf hadoop-3.3.4.tar.gz

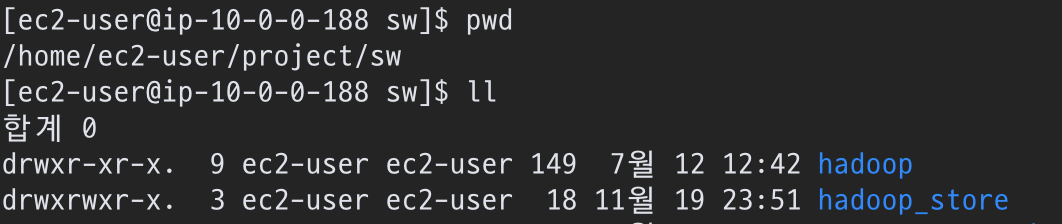

# hadoop으로 이름 변경하여 옮기기

# HADOOP_HOME=/home/ec2-user/project/sw/hadoop

mv hadoop-3.3.4 /home/ec2-user/project/sw/hadoophttps://hadoop.apache.org/releases.html

4) 환경 설정

4-1) 환경 변수 설정

sudo update-alternatives --config java

-> 출력되는 디력토리 복사vi ~/.bashrc아래 내용 추가

##HADOOP VARIABLES START

export JAVA_HOME=</copied/javahome/path>

export HADOOP_INSTALL=

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export YARN_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib"

##HADOOP VARIABLES END

source ~/.bashrc4-2) hadoop-env.sh 수정

vi <HADOOP_HOME>/etc/hadoop/hadoop-env.sh

아래 수정(53줄)

export JAVA_HOME = <JAVA_HOME>

4-3) core-site.xml 수정

vi <HADOOP_HOME>/etc/hadoop/core-site.xml

<!-- <configuration> </configuration> 을 아래처럼 수정 -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>4-4) yarn-site.xml 수정

vi <HADOOP_HOME>/etc/hadoop/yarn-site.xml

<!-- <configuration> </configuration> 을 아래처럼 수정 -->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shffle</value>

</property>

<property>

<name>yarn.nodemanager.aaux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShffleHandler</value>

</property>

</configuration>4-5) mapred-site.xml 수정

vi <HADOOP_HOME>/etc/hadoop/mapred-site.xml

<!--(mapred-site.xml이 없으면 mapred-site.xml.template 을 복사해서 수정)-->

<!-- <configuration> </configuration> 을 아래처럼 수정 -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>4-6) hdfs-site.xml 수정

# hadoop_store 디렉토리 생성

(hadoop이 위치한 디렉토리(/home/ec2-user/project/sw/)에 )

mkdir -p /home/ec2-user/project/sw/hadoop_store/hdfs/namenode

mkdir -p /home/ec2-user/project/sw/hadoop_store/hdfs/datanode

vi <HADOOP_HOME>/etc/hadoop/hdfs-site.xml<!-- <configuration> </configuration> 을 아래처럼 수정

</home/my/dir/datastore> 수정하기!-->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:</home/my/dir/datastore>/hdfs/namenode

</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:</home//my/dir/datastore>/hdfs/datanode

</value>

</property>

</configuration>5) Hadoop 파일 시스템 포맷

hdfs namenode -format6) Hadoop Single Node Cluster 실행

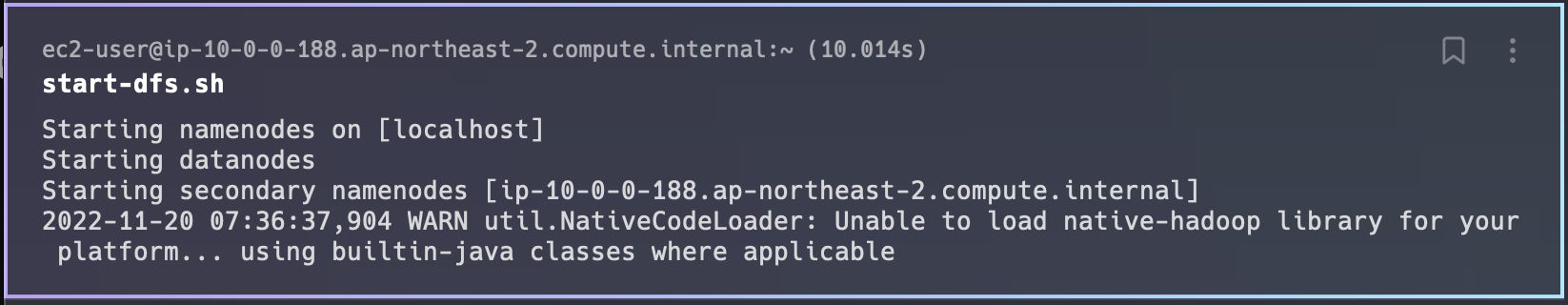

start-dfs.sh

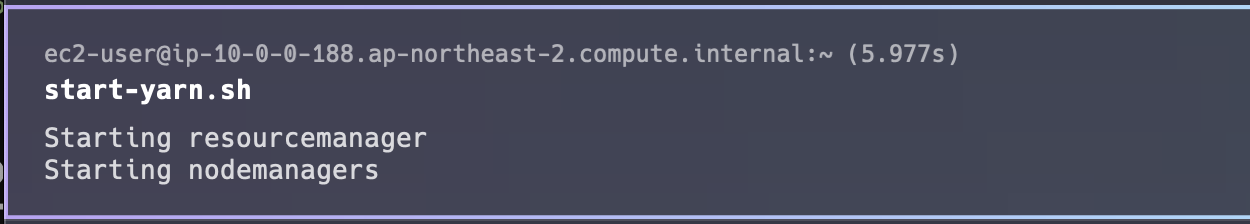

start-yarn.sh

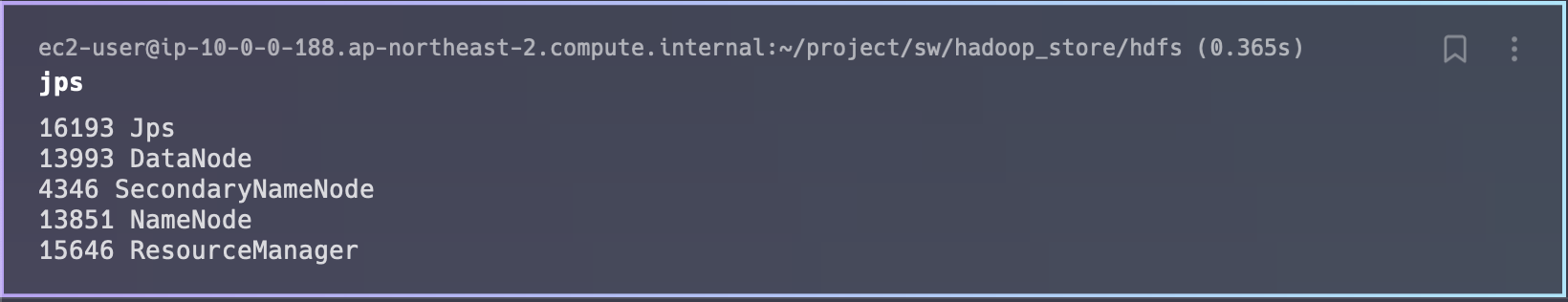

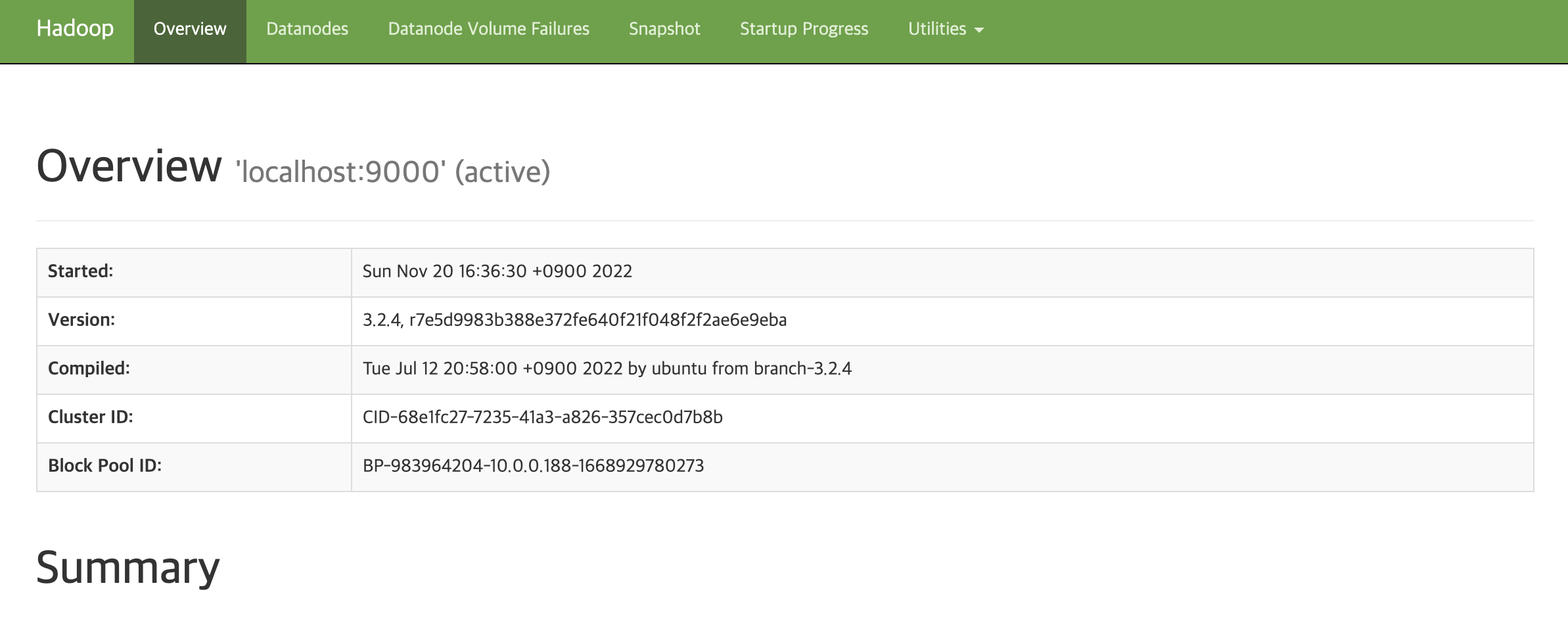

7) 확인

jps

localhost:9870 접속

'Data > Data Engineering & Analystics' 카테고리의 다른 글

| [Hive] Redhat8에 Hive 설치하기 (2) | 2022.11.20 |

|---|---|

| [MySQL] Redhat8에 MySQL 설치하기 (0) | 2022.11.20 |

| [Podman] RHEL8에 Podman 설치하기 ( + Podman compose) (0) | 2022.11.20 |

| [Kafka] Podman compose으로 Kafka 실행 (0) | 2022.11.20 |

| [ERROR] Hadoop Datanode 실행 안됨Initialization failed for Block pool <registering> 0. Exiting (0) | 2022.11.20 |